What is Reinforcement Learning?

In its literal sense

Reinforcement: A consequence that follows a behavior and increases (or attempts to increase) the likelihood of that behavior occurring in the future[source].

There are two types of reinforcement: positive and negative. Positive reinforcement offers a reward when the wanted behaviour is expressed. Negative reinforcement takes away an undesirable element when the desired behaviour is achieved. Giving a penalty is punishment rather than negative reinforcement, since it aims to decrease a behaviour instead of increasing it.

Learning: Acquiring knowledge and skills and having them readily available from memory so you can make sense of future problems and opportunities[source].

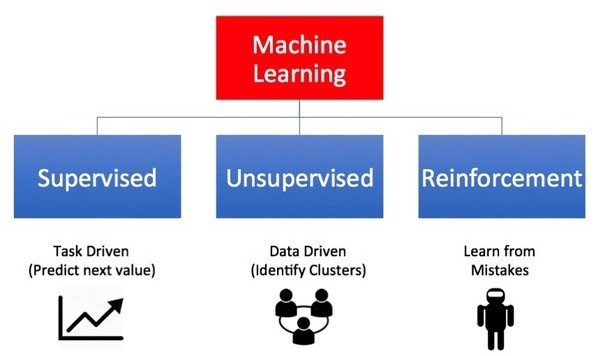

RL within ML paradigm

“A computer program is said to learn from experience E with respect to some class of tasks T and performance measure P, if its performance at tasks in T, as measured by P, improves with experience E.”

- Mitchell 1997

Fig. 3 Broad types of ML

Fitting RL into this definition gives:

Task (T)
: Decision-making strategy

Performance (P)
: Cumulative rewards

Experience (E)
: Interaction with the environment/system
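As a rough illustration of what "cumulative rewards" can mean, one common formalization is the return. The symbols here are notational assumptions that the text above does not define: $r_t$ is the reward received at step $t$, $N$ is the final step of an episode, and $\gamma$ is an optional discount factor.

$$
G_t = \sum_{k=0}^{N-t-1} \gamma^{k}\, r_{t+k+1}, \qquad 0 \le \gamma \le 1
$$

With $\gamma = 1$ this is simply the sum of all future rewards. As a small worked case, for rewards $r_1 = 1$, $r_2 = 0$, $r_3 = 2$ and $\gamma = 0.5$, the return from the start is $G_0 = 1 + 0.5 \cdot 0 + 0.25 \cdot 2 = 1.5$.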

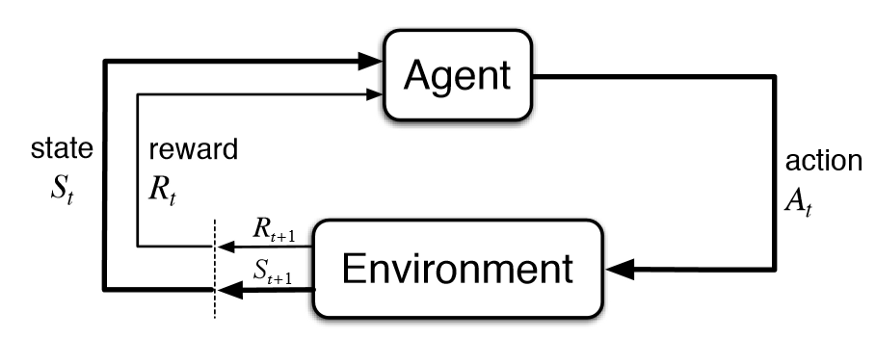

The fundamental block of RL

Reinforcement Learning (RL) is the science of decision making in a pre-defined environment: learning to map situations to actions so as to maximize a numerical reward signal.

Fig. 4 A fundamental RL block

A typical interaction loop can be formulated as follows:

1. Observe the current state of the environment, $s_t$, and the current reward, $r_t$.
2. The agent decides on an action, $a_t$.
3. The agent performs the action through interaction with the environment.
4. The environment shifts into a new state, $s_{t+1}$.
5. The environment returns reward feedback, $r_{t+1}$.
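The loop above can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions: `CorridorEnv`, `random_agent`, and `run_episode` are hypothetical names invented here, the environment is a toy corridor of five cells, and the reward values are arbitrary.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: a corridor of cells 0..length-1.

    The episode ends when the agent reaches the last cell.
    """

    def __init__(self, length=5):
        self.length = length
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        # action is -1 (left) or +1 (right); the position is clipped to the corridor
        self.state = min(max(self.state + action, 0), self.length - 1)
        done = self.state == self.length - 1
        reward = 1.0 if done else -0.1  # assumed values: small step cost, bonus at the goal
        return self.state, reward, done


def random_agent(state, reward, rng):
    """Placeholder agent: ignores its inputs and picks a random direction."""
    return rng.choice([-1, 1])


def run_episode(env, agent, rng, max_steps=1000):
    state, reward = env.reset(), 0.0            # observe the initial state; no reward yet
    total = 0.0
    for t in range(max_steps):
        action = agent(state, reward, rng)      # agent decides on an action
        state, reward, done = env.step(action)  # environment returns new state and reward
        total += reward
        if done:
            break
    return total, t + 1


rng = random.Random(0)
total_reward, steps = run_episode(CorridorEnv(), random_agent, rng)
print(f"episode finished after {steps} steps, total reward {total_reward:.1f}")
```

A learning agent would replace `random_agent` with something that uses the observed states and rewards to improve its choices over time; the loop itself stays the same.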

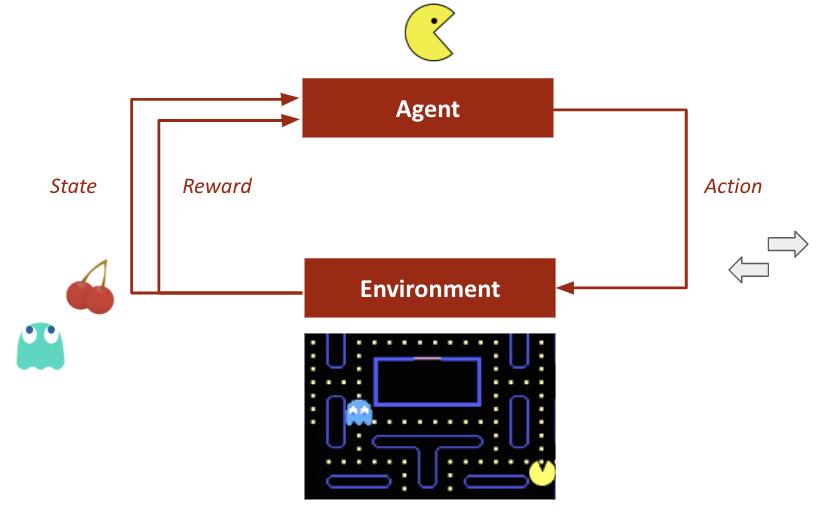

Pacman as an RL example

Fig. 5 Pacman as an RL example

Pacman is one of the best-known arcade games, and almost everyone has played it at least once. Framed as an RL problem, it has the following components:

State Space
: All possible configurations of the game, including the positions of the points, the ghosts, and the player.

Action Space
: The set of all allowed actions: {left, right, up, down, stay}.

Policy
: A mapping from the state space to the action space.

The objective of the player is to take a sequence of actions that maximizes the total reward accumulated by the end of the game.[source]
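To make the three terms concrete, here is a small sketch of how a Pacman-like state, the action set, and a policy might be represented. Everything in it is an assumption for illustration: the `State` fields, the grid coordinates, the absence of walls, and the hand-written `greedy_policy` are not taken from any real Pacman implementation, and a policy learned through RL would replace the hand-written rule.

```python
from dataclasses import dataclass
from typing import FrozenSet, Tuple

Position = Tuple[int, int]  # (row, col) on an assumed wall-free grid

# Action space: each action name maps to a (row, col) displacement.
ACTIONS = {
    "left": (0, -1),
    "right": (0, 1),
    "up": (-1, 0),
    "down": (1, 0),
    "stay": (0, 0),
}


@dataclass(frozen=True)
class State:
    """One element of the state space: where everything currently is."""
    player: Position
    ghosts: FrozenSet[Position]
    points: FrozenSet[Position]


def manhattan(a: Position, b: Position) -> int:
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def greedy_policy(state: State) -> str:
    """Hypothetical policy: move toward the nearest point, never onto a ghost."""
    if not state.points:
        return "stay"

    def score(action: str) -> float:
        dr, dc = ACTIONS[action]
        nxt = (state.player[0] + dr, state.player[1] + dc)
        if nxt in state.ghosts:
            return float("inf")  # stepping onto a ghost is never chosen if avoidable
        return min(manhattan(nxt, p) for p in state.points)

    # min() keeps the first best action, so ties are broken by the order in ACTIONS
    return min(ACTIONS, key=score)


state = State(
    player=(1, 1),
    ghosts=frozenset({(1, 2)}),
    points=frozenset({(1, 3), (3, 1)}),
)
action = greedy_policy(state)
print(action)
```

Because `State` is frozen and hashable, a tabular policy could also be stored as a plain dictionary from `State` to action name, which matches the "mapping from the state space to the action space" definition directly.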

Using RL on OpenAI Gym Atari

Fig. 6 RL on Atari environments

RL algorithms can be used on many different Atari game environments, where the environment definitions, player objectives, allowable actions, etc. are all distinct.

RL Agent learns to walk

Fig. 7 RL Agent learns to walk

Beyond the relatively simple decision making of an arcade game, RL agents can also learn highly complex tasks such as bipedal walking, though training may take much longer.

Many Faces of RL

RL finds application at the intersection of various disciplines and fields, as illustrated below:

Fig. 8 Many Faces of RL

Distinctive Characteristics of RL

What makes RL different from other ML paradigms?

- Not strictly supervised: instead of pre-labelled data, the data is generated through interaction with the environment.
- Unlike unsupervised learning, the goal is not to find relationships within the data but to maximize some reward function.
- Reward feedback determines the quality of performance.
- Time is a key component: the process is sequential (non-i.i.d. data) and feedback may be delayed.
- Each action the agent takes affects the subsequent data it receives.
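The last point can be illustrated with a tiny sketch. It assumes a hypothetical one-dimensional track of five cells and two fixed policies (none of this comes from the original text); the only purpose is to show that the states an agent gets to observe depend on the actions it chose earlier, which is why the data is not i.i.d.

```python
from collections import Counter


def rollout(policy, n_steps=20, start=2, size=5):
    """Return the states visited on a hypothetical 1-D track of `size` cells."""
    state, visited = start, []
    for _ in range(n_steps):
        visited.append(state)
        # the chosen action determines which state is observed next
        state = min(max(state + policy(state), 0), size - 1)
    return visited


def always_left(state):
    return -1


def always_right(state):
    return +1


left_data = Counter(rollout(always_left))
right_data = Counter(rollout(always_right))

print("states seen when always moving left: ", dict(left_data))
print("states seen when always moving right:", dict(right_data))
```

Both rollouts start from the same cell in the same environment, yet they collect almost entirely different data. In supervised learning the dataset is fixed in advance, whereas here the agent's own behaviour shapes what it gets to learn from.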

Summarizing

RL is the science of learning to make decisions from interaction with an environment.

Solving a problem through RL makes us think about:

- time
- long-term consequences of actions
- effective and efficient gathering of experience
- predicting future states
- dealing with uncertainty